KIT208 Assignment 5 Report_Group 4

Authors:

Jigme Nidup Tenzin (559630)

Anh Quan Doan (624501)

Matthew Laughlin (620636)

Introduction

Elevator pitch:

Have you ever spent hours moving furniture back and forth to find the best look for a house design, only to end up physically drained? This virtual reality interior design training application lets you arrange furniture effortlessly across different design scenes, turning you into an interior design expert without breaking a sweat.

Revisions since Assignment 4:

- Added a 3D model of a lamp.

- Introduced sound effects for object interactions, plus background music.

- Added a timer to the user's menu.

- Fixed bugs in character movement.

- Added lighting effects from a 3D model (the lamp) and from the environment (day and night).

Technical Development

Overall, the development of this prototype focused on making the application more realistic. Sound effects were implemented to make the application more responsive, and lighting effects were added both from 3D objects, such as the lamp model inside the house, and from the environment (day and night). To increase the challenge, we also added a timer to the design application to put learners under time pressure. The user menu was extended to include the timer's functions and to switch the environment between day and night lighting (by changing the skybox material).

Timer on the menu:

Timer.cs: This script manages the timer shown on the application menu. Its variables track the elapsed time ("timeTaken") and the state of the timer ("paused"). In the "Update()" method, the script adds to the elapsed time while the timer is not paused and continuously displays it on the menu through a TextMesh Pro (TMP) Text component. The user starts or pauses the timer by interacting with the menu, which sets the Boolean variable "paused" to false or true.
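To make the timer logic concrete, here is a minimal sketch in plain Python, not the project's Unity C# code. It assumes the timer starts paused and that resetting it also stops it. The delta_time argument stands in for Unity's Time.deltaTime, and the returned string stands in for the TMP Text component's text. The mm:ss display format is also an assumption.

```python
class Timer:
    """Plain-Python model of the Timer.cs logic (not Unity code)."""

    def __init__(self) -> None:
        self.time_taken = 0.0  # mirrors "timeTaken": seconds elapsed
        self.paused = True     # mirrors "paused"; assumed to start paused

    def start(self) -> None:
        self.paused = False

    def pause(self) -> None:
        self.paused = True

    def reset(self) -> None:
        self.time_taken = 0.0
        self.paused = True     # assumption: reset also stops the timer

    def update(self, delta_time: float) -> str:
        """Called once per frame, like Update(); returns the text shown on the menu."""
        if not self.paused:
            self.time_taken += delta_time
        return self.format()

    def format(self) -> str:
        # Assumed mm:ss display, e.g. "01:05"
        minutes, seconds = divmod(int(self.time_taken), 60)
        return f"{minutes:02d}:{seconds:02d}"
```

Because elapsed time only accumulates inside update() when paused is false, pausing simply freezes the displayed value until the timer is started again.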

General Menu and Object Interactions:

DetectButtonForPickup.cs: This C# script handles all controller button input and defines what happens when a menu item is selected. It monitors button presses to move and scale objects, shows the menu, and reacts to menu choices. The script also handles scene switching, controls the start, pause and reset functions of the timer, and allows teleportation to various locations. It switches between day and night modes by modifying the lighting and the skybox material. After an action is taken on a selected menu item, it hides and resets the menu. Most of the application's interactivity is routed through this script.

- The “Start()” method initializes references of components and game objects.

- The “Update()” method is used to execute functions according to the user’s input.

- For picking up objects, it checks for a trigger press on the controller and calls the "grabItem()" method from "DetectCollisionForPickup.cs" to perform the pickup.

- For putting objects down, it checks whether the trigger button has been released and, if a 3D object is being held, calls "dropItem()" to put it down.

- For scaling 3D game objects, it maps the controller's "X" and "Y" buttons to scaling the object up and down.

- The menu is displayed when the user presses the right trigger, bringing up the item and location menu. If an item on the menu is selected, the script instantiates the corresponding prefab in the scene. If the user chooses a location, it calls the LoadScene() method to switch to that scene. It also toggles the environment's lighting by changing the skybox material, toggles its sound effects, and controls the timer on the menu through its various timer functions.
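The menu handling described above can be sketched as a simple dispatcher. This is a plain-Python model, not the project's C# code. The string format of the menu choices ("item:...", "scene:...", "timer:..."), the skybox material names and the scene names are all hypothetical. Instantiating a prefab and calling SceneManager.LoadScene are represented here only by updating fields.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MenuController:
    """Plain-Python model of the menu handling described for DetectButtonForPickup.cs."""
    menu_visible: bool = False
    is_night: bool = False
    skybox: str = "DaySkybox"             # hypothetical skybox material names
    current_scene: str = "HouseInterior"  # hypothetical scene name
    timer_state: str = "stopped"
    spawned: List[str] = field(default_factory=list)

    def open_menu(self) -> None:
        # In the application this happens when the right trigger is pressed
        self.menu_visible = True

    def select(self, choice: str) -> None:
        """React to a selected menu item, then hide the menu."""
        kind, _, value = choice.partition(":")
        if kind == "item":
            self.spawned.append(value)       # stands in for instantiating a prefab
        elif kind == "scene":
            self.current_scene = value       # stands in for SceneManager.LoadScene(value)
        elif kind == "daynight":
            self.is_night = not self.is_night
            self.skybox = "NightSkybox" if self.is_night else "DaySkybox"
        elif kind == "timer":
            states = {"start": "running", "pause": "paused", "reset": "stopped"}
            self.timer_state = states[value]
        else:
            raise ValueError(f"unknown menu choice: {choice}")
        self.menu_visible = False            # the menu hides after any action
```

Keeping every menu action in one branch, followed by a single "hide the menu" step, mirrors the described behaviour that the menu always closes once an item has been acted on.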

DetectingCollisionWithFurnitureMenu.cs: This script keeps track of the currently selected menu item and highlights it when a collision is detected. Its main variables are selectedMenuItemMaterial (the material applied to a selected menu item to create a visual highlight), defaultMenuItemMaterial (the default material of a menu item) and menuItemCollidedwith (the menu item currently collided with). When an object enters a trigger collider, the OnTriggerEnter() method is called and checks whether the colliding object has the "MenuItem" tag. If it does, the script resets the previously selected menu item, if there is one, to the default material, updates menuItemCollidedwith to the newly collided item, and applies the highlight material to it.
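The highlighting logic can be modelled in plain Python as follows. This is a sketch of the described behaviour, not the project's C# code. Materials are represented as plain strings, and the MenuItem class is a hypothetical stand-in for a tagged GameObject.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MenuItem:
    name: str
    tag: str = "MenuItem"
    material: str = "Default"


class MenuHighlighter:
    """Plain-Python model of DetectingCollisionWithFurnitureMenu.cs."""

    def __init__(self, selected_material: str, default_material: str) -> None:
        self.selected_menu_item_material = selected_material
        self.default_menu_item_material = default_material
        self.menu_item_collided_with: Optional[MenuItem] = None

    def on_trigger_enter(self, other: MenuItem) -> None:
        if other.tag != "MenuItem":
            return  # ignore anything that is not a menu item
        previous = self.menu_item_collided_with
        if previous is not None and previous is not other:
            previous.material = self.default_menu_item_material  # remove old highlight
        self.menu_item_collided_with = other
        other.material = self.selected_menu_item_material       # highlight new item
```

Because only one reference is stored, at most one menu item is highlighted at any time.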

DetectCollisionForPickup.cs: This script keeps track of the interactable game objects within reach of the controller and of the object currently held, so that objects can be grabbed and released. It also ensures that only one object is held at a time to avoid errors. The OnTriggerEnter() method is called when two colliders in the scene collide. It checks for the "Grabable" tag and adds the object to the list of interactable items, while OnTriggerExit() removes it again. The grabItem() method lets the user hold a game object. It runs only when two conditions are met: at least one object is within reach in the currentyCorridedWith list (currentyCorridedWith[0] != null), and no item is currently held (grabedItem == null). If both conditions are satisfied, the method assigns the first item in currentyCorridedWith to grabedItem, designating it as the held item, and changes the item's parent to the anchor, attaching it to the hand or controller for manipulation. Conversely, the dropItem() method releases the held item. It unparents grabedItem by setting its parent to null and then sets grabedItem to null to indicate that no item is held.
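The grab-and-release logic above can be sketched in plain Python. This models the described behaviour and is not the project's Unity code. A string parent stands in for transform.parent, and the anchor name "RightHandAnchor" is hypothetical. The identifiers are spelled conventionally here, and comments note the original script's names.

```python
from typing import List, Optional


class SceneObject:
    def __init__(self, name: str, tag: str = "Grabable") -> None:
        self.name = name
        self.tag = tag
        self.parent: Optional[str] = None  # stands in for transform.parent


class PickupDetector:
    """Plain-Python model of DetectCollisionForPickup.cs."""

    def __init__(self, anchor: str = "RightHandAnchor") -> None:
        self.anchor = anchor  # hypothetical name of the hand/controller anchor
        self.currently_collided_with: List[SceneObject] = []  # "currentyCorridedWith"
        self.grabbed_item: Optional[SceneObject] = None       # "grabedItem"

    def on_trigger_enter(self, obj: SceneObject) -> None:
        if obj.tag == "Grabable" and obj not in self.currently_collided_with:
            self.currently_collided_with.append(obj)

    def on_trigger_exit(self, obj: SceneObject) -> None:
        if obj in self.currently_collided_with:
            self.currently_collided_with.remove(obj)

    def grab_item(self) -> None:
        # Grab only when something is in reach and nothing is already held
        if self.currently_collided_with and self.grabbed_item is None:
            self.grabbed_item = self.currently_collided_with[0]
            self.grabbed_item.parent = self.anchor  # object now follows the hand

    def drop_item(self) -> None:
        if self.grabbed_item is not None:
            self.grabbed_item.parent = None  # unparent: object stays where released
            self.grabbed_item = None
```

The grabbed_item is None check in grab_item() is what enforces the one-object-at-a-time rule. A second trigger press while something is held does nothing.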

Lamp interaction:

LampScript.cs: This script controls the lamp 3D model so that it can be turned on and off. In "Start()", the script stores the original materials of the lamp's components in the "defaultMaterials" array. When the lamp is on, a "litMaterial" is applied to simulate the light effect, and when it is off, the default materials are restored. The script also references a "pointLight" GameObject, which provides the actual light source, and the player's right hand ("rightHand"), which is used to detect interactions. The "changeMaterial()" method toggles the lamp's state by iterating through "lampComponents", switching their materials, and turning "pointLight" on or off. It is called from the "OnTriggerEnter(Collider collider)" method when the right hand enters the lamp's trigger zone. With this script, the player can change the lamp's appearance and simulate lighting, adding to the overall game environment.
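The lamp toggle can be modelled in plain Python as shown below. This is a sketch of the described behaviour, not the project's C# code. It assumes that every lamp component receives the lit material when the lamp is on, and it represents pointLight's active state as a Boolean field. The right-hand check compares plain name strings rather than Collider references.

```python
from typing import List


class LampComponent:
    def __init__(self, material: str) -> None:
        self.material = material


class Lamp:
    """Plain-Python model of LampScript.cs."""

    def __init__(self, components: List[LampComponent], lit_material: str) -> None:
        self.lamp_components = components
        self.lit_material = lit_material
        # Like Start(): remember each component's original material
        self.default_materials = [c.material for c in components]
        self.point_light_active = False  # stands in for the pointLight GameObject

    def change_material(self) -> None:
        """Toggle between on (lit material, light active) and off (original materials)."""
        turning_on = not self.point_light_active
        for component, default in zip(self.lamp_components, self.default_materials):
            component.material = self.lit_material if turning_on else default
        self.point_light_active = turning_on

    def on_trigger_enter(self, collider_name: str, right_hand: str = "RightHand") -> None:
        # Only the player's right hand toggles the lamp
        if collider_name == right_hand:
            self.change_material()
```

Storing the original materials once at start-up is what allows the "off" state to be restored exactly, however many times the lamp is toggled.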

Point teleportation:

SimpleTeleportBehaviour.cs: This script lets the user teleport to a chosen location within the scene by aiming with the Oculus controller. When the user holds the "A" button to aim, the script calls teleporter.ToggleDisplay(true) to show the aiming graphic and sets "isAiming" to true. When the aim button is released, teleporter.ToggleDisplay(false) is called to hide the arrow graphic, and "isAiming" is set to false. When the teleport button "B" is pressed while "A" is held (teleportation is only allowed when "isAiming" is true), the user is teleported using the teleporter.Teleport() method.
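The aim-then-teleport flow can be sketched as a small state machine in plain Python. This is a model of the described behaviour, not the project's code or the Simple VR Teleporter asset's API. The Teleporter class below is a hypothetical stand-in that only records calls. Button events are passed in as Booleans for each frame.

```python
class Teleporter:
    """Hypothetical stand-in for the asset's teleporter object; it only records calls."""

    def __init__(self) -> None:
        self.display_visible = False
        self.teleport_count = 0

    def toggle_display(self, visible: bool) -> None:
        self.display_visible = visible

    def teleport(self) -> None:
        self.teleport_count += 1


class SimpleTeleportBehaviour:
    """Plain-Python model of the aim-then-teleport logic in SimpleTeleportBehaviour.cs."""

    def __init__(self, teleporter: Teleporter) -> None:
        self.teleporter = teleporter
        self.is_aiming = False

    def update(self, a_down: bool, a_up: bool, b_down: bool) -> None:
        """One frame of input: press/release events for A (aim) and a press of B (teleport)."""
        if a_down:
            self.teleporter.toggle_display(True)   # show the aiming graphic
            self.is_aiming = True
        if a_up:
            self.teleporter.toggle_display(False)  # hide it again
            self.is_aiming = False
        if b_down and self.is_aiming:
            self.teleporter.teleport()             # teleport only while aiming
```

Gating the teleport on is_aiming means that pressing "B" on its own never moves the player.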

Player Movement:

PlayerMovement.cs: This script handles 3D player movement using input from the Oculus VR controller. The "rightStick()" method rotates the player based on input from the right thumbstick, whereas the "leftStick()" method moves the player based on input from the left thumbstick, applying acceleration and deceleration factors. "rightStick()" is currently disabled, so "Update()" calls only "leftStick()" to allow player movement.
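As an illustration of movement with acceleration and deceleration, here is a plain-Python sketch of what leftStick() might do. It is not the project's code. The maximum speed, acceleration, deceleration and dead-zone values are hypothetical. The sketch also assumes the player keeps gliding in the last stick direction while slowing down.

```python
import math
from typing import List, Tuple


class PlayerMovement:
    """Plain-Python model of leftStick(): thumbstick movement with acceleration/deceleration."""

    def __init__(self, max_speed: float = 2.0, acceleration: float = 4.0,
                 deceleration: float = 6.0, dead_zone: float = 0.1) -> None:
        # All four parameters are hypothetical values, not the project's settings
        self.max_speed = max_speed        # units per second
        self.acceleration = acceleration  # speed gained per second while the stick is pushed
        self.deceleration = deceleration  # speed lost per second after release
        self.dead_zone = dead_zone        # ignore tiny stick deflections
        self.speed = 0.0
        self.direction: Tuple[float, float] = (0.0, 0.0)
        self.position: List[float] = [0.0, 0.0]  # x and z on the floor plane

    def left_stick(self, stick_x: float, stick_y: float, dt: float) -> None:
        magnitude = math.hypot(stick_x, stick_y)
        if magnitude > self.dead_zone:
            # Stick pushed: speed up towards max_speed in the stick's direction
            self.direction = (stick_x / magnitude, stick_y / magnitude)
            self.speed = min(self.max_speed, self.speed + self.acceleration * dt)
        else:
            # Stick released: slow down smoothly instead of stopping instantly
            self.speed = max(0.0, self.speed - self.deceleration * dt)
        self.position[0] += self.direction[0] * self.speed * dt
        self.position[1] += self.direction[1] * self.speed * dt
```

Ramping speed up and down, rather than jumping straight to full speed or stopping dead, is a common way to reduce abrupt motion in VR locomotion.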

Audio Script:

AudioManager.cs: This script manages the background music and the sound effects played when interacting with objects. The "Awake()" method calls DontDestroyOnLoad() so that the background music keeps playing without interruption or restarting when the user switches scenes. The "Start()" method starts the background music, and "PlaySFX()" plays individual sound effect clips.
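The effect of DontDestroyOnLoad() on the music can be modelled in plain Python, as below. This is a sketch, not the project's code. The persistent flag mimics DontDestroyOnLoad(), and load_scene() models Unity destroying non-persistent objects on a scene change. The singleton check in awake() is an assumption and is not described above. It is a common companion pattern that stops a second AudioManager, created when a scene is reloaded, from starting the music again. The clip names are hypothetical.

```python
from typing import Dict, List, Optional


class AudioManager:
    """Plain-Python model of AudioManager.cs; `persistent` mimics DontDestroyOnLoad()."""

    instance: Optional["AudioManager"] = None  # assumed singleton guard (not in the original)

    def __init__(self, clips: Dict[str, str]) -> None:
        self.clips = clips          # hypothetical clip name -> audio file mapping
        self.persistent = False
        self.destroyed = False
        self.music_playing = False
        self.sfx_played: List[str] = []

    def awake(self) -> None:
        if AudioManager.instance is not None and AudioManager.instance is not self:
            self.destroyed = True   # a second copy from a reloaded scene is discarded
            return
        AudioManager.instance = self
        self.persistent = True      # like DontDestroyOnLoad(gameObject)

    def start(self) -> None:
        if not self.destroyed and not self.music_playing:
            self.music_playing = True  # background music starts once and keeps going

    def play_sfx(self, name: str) -> None:
        self.sfx_played.append(self.clips[name])  # stands in for AudioSource.PlayOneShot(clip)


def load_scene(objects: List[AudioManager]) -> List[AudioManager]:
    """On a scene change, only objects marked persistent survive."""
    return [obj for obj in objects if obj.persistent]
```

Without such a guard, returning to the first scene would create a second copy of the manager alongside the persistent one.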

3D Models

Since the theme of this application is interior design, all of the 3D models and scenes used are related to furniture and housing, and were obtained from online asset stores. The models were selected for realistic features that most closely resemble the corresponding real-world objects, including the house model and the furniture models.

Furniture 3D models used: sofas, two-seat sofas, a round table, a large wardrobe and a bed

- These items are common in typical households, which made them essential for an interior design application. In the application, they can be imported from the user menu. They are interactable: the user can grab and hold them with the controller and scale them to different sizes.

House: This 3D model represents a typical real-world house, with different rooms and floors, to simulate the experience of designing an interior within a house. It is the main environment in which the user interacts with other objects. There are two separate scenes: the design training takes place mainly inside the house, and the user can switch to another scene to view the outside of the house.

Lamp: This model represents a real-world lamp that can be turned on and off. It was used to showcase artificial lighting within the house, which affects the interior design experience and makes the application more immersive. In the application, the lamp's light can be toggled on and off via the Oculus controller.

Usability Testing

Design and Plan

The goal of the initial usability test at this early development stage was to assess whether a VR application is justified for this purpose, offering a range of interactions that mimic interior design training in a real-world studio while keeping the experience physically effortless for the user. The testing focused on the user's sense of immersion and comfort when using the VR application, as well as their preferred methods for interior design training.

We chose an in-person testing methodology using the VR headset so that a facilitator could observe the user's live experience (Interaction Design Foundation 2023). Participants used the application by wearing the Oculus VR headset, with the application loaded from the developer's computer (usability.gov 2013).

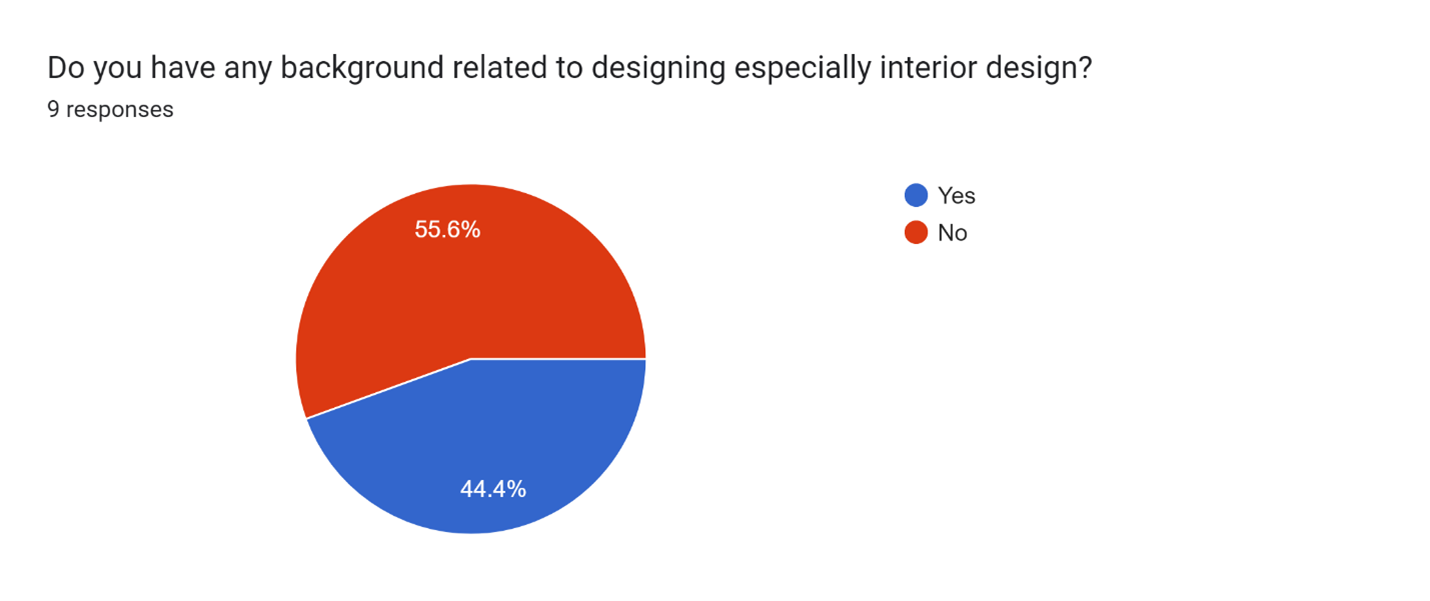

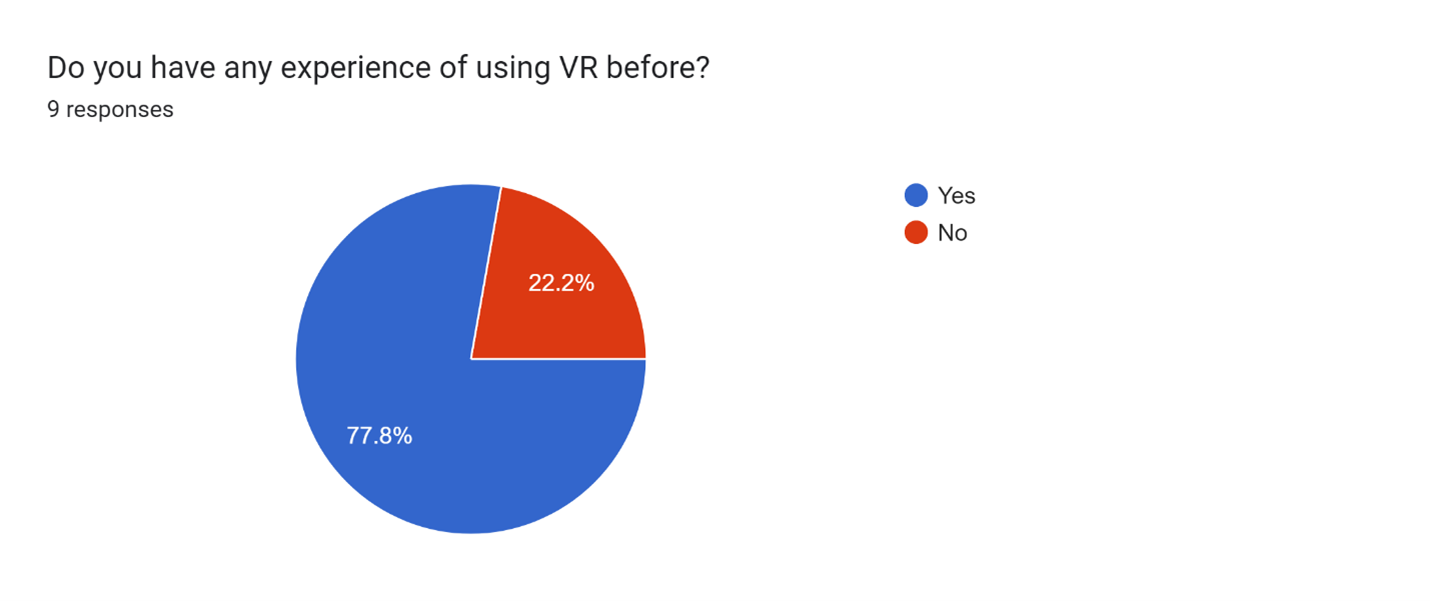

To collect results, participants completed a survey divided into pre-experiment and post-experiment questions. Pre-experiment questions aimed to determine participants' background in interior design and prior experience with VR technology. Post-experiment questions assessed their experience with the application. Conducted in accordance with guidance from usability.gov (2013), this usability test provided valuable insights for the development team.

Recruitment

Ideally, the test would have involved a diverse group of people, particularly those with a background in design (the target audience). Such a group would also be important for assessing the accessibility of the application, especially for users with physical or cognitive impairments (NSW Government n.d.). However, because of the 19-day development phase of the project and the developers' educational commitments, the team was only able to gather results from family and friends with varied backgrounds. Although participants were asked to answer honestly, this recruitment introduced a degree of bias, affecting the study's reliability and validity. The limited participation (only 9 responses), from individuals without specific interior design backgrounds, also made the experiment less comprehensive.

Protocol

Each team member carried the headset and their laptop with the application to the participants' locations to conduct the test. This resulted in informal testing, in which the environment and other factors could not be controlled. Future test phases should be carried out in controlled, formal settings with a wide range of participants who have backgrounds in interior design.

Report of the findings

Most of the questions were multiple choice: either yes/no questions or ratings from 1 to 5 indicating the participant's level of agreement.

Pre-experiment questions:

This question was designed to establish each participant's background and their knowledge of the field this application targets.

This question was designed to establish each participant's experience with VR applications and their proficiency with the technology, as people with no VR experience might find this application more challenging than experienced users.

Post-experiment questions:

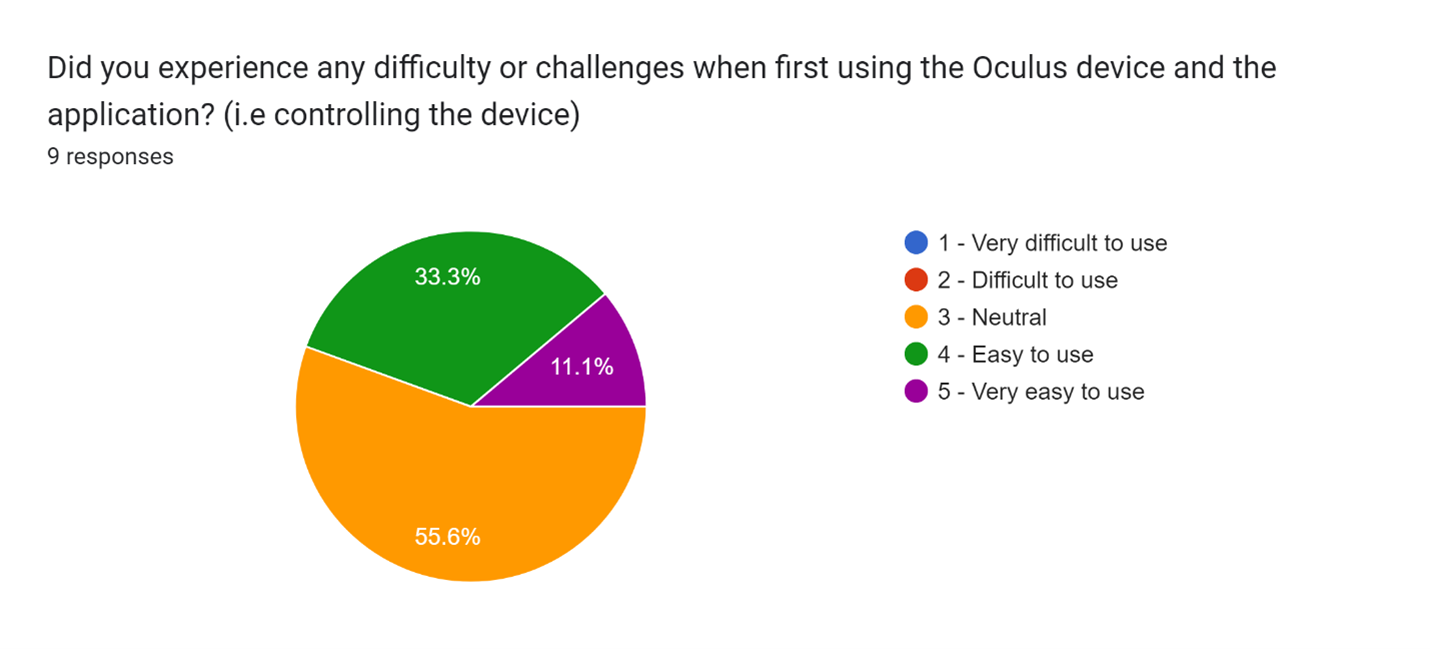

This question aimed to find out whether users had any difficulties using the VR headset, providing insight into how comfortable VR is, particularly for this application.

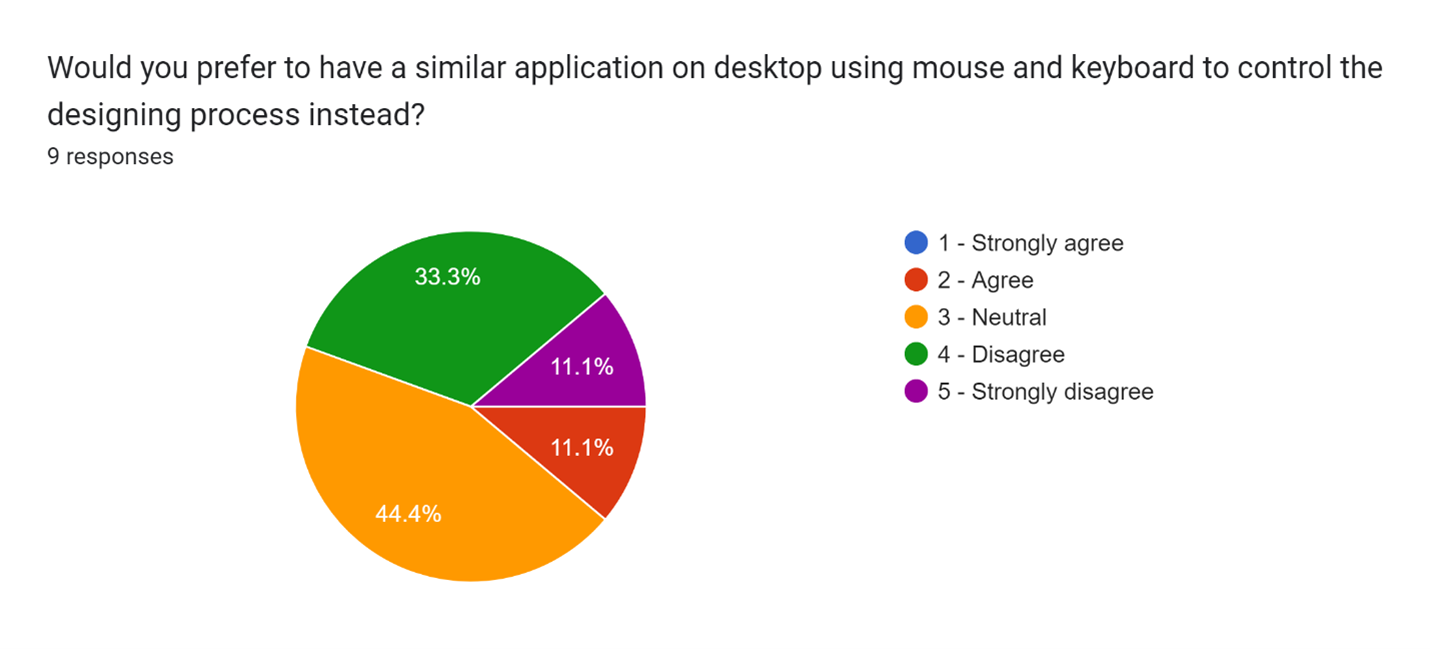

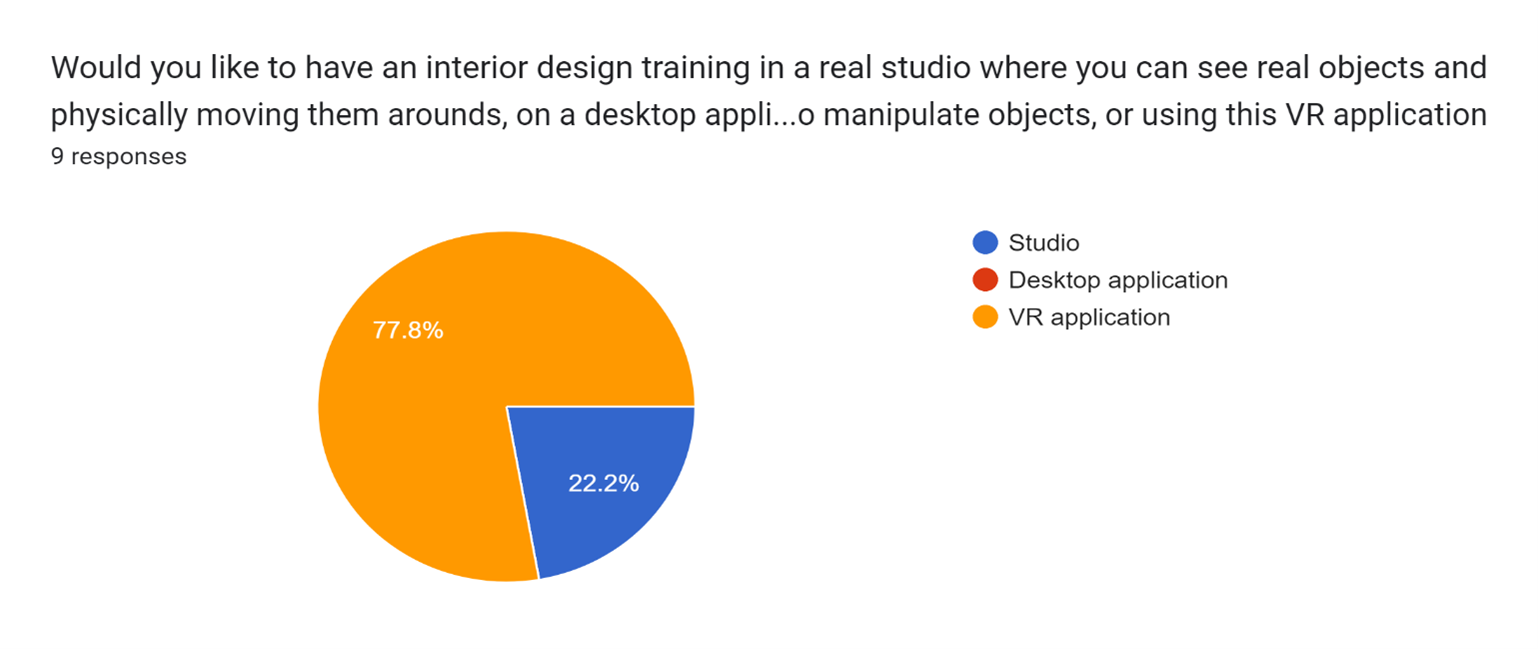

This question's goal was to justify the use of a VR application over a desktop application by finding out which the testers preferred.

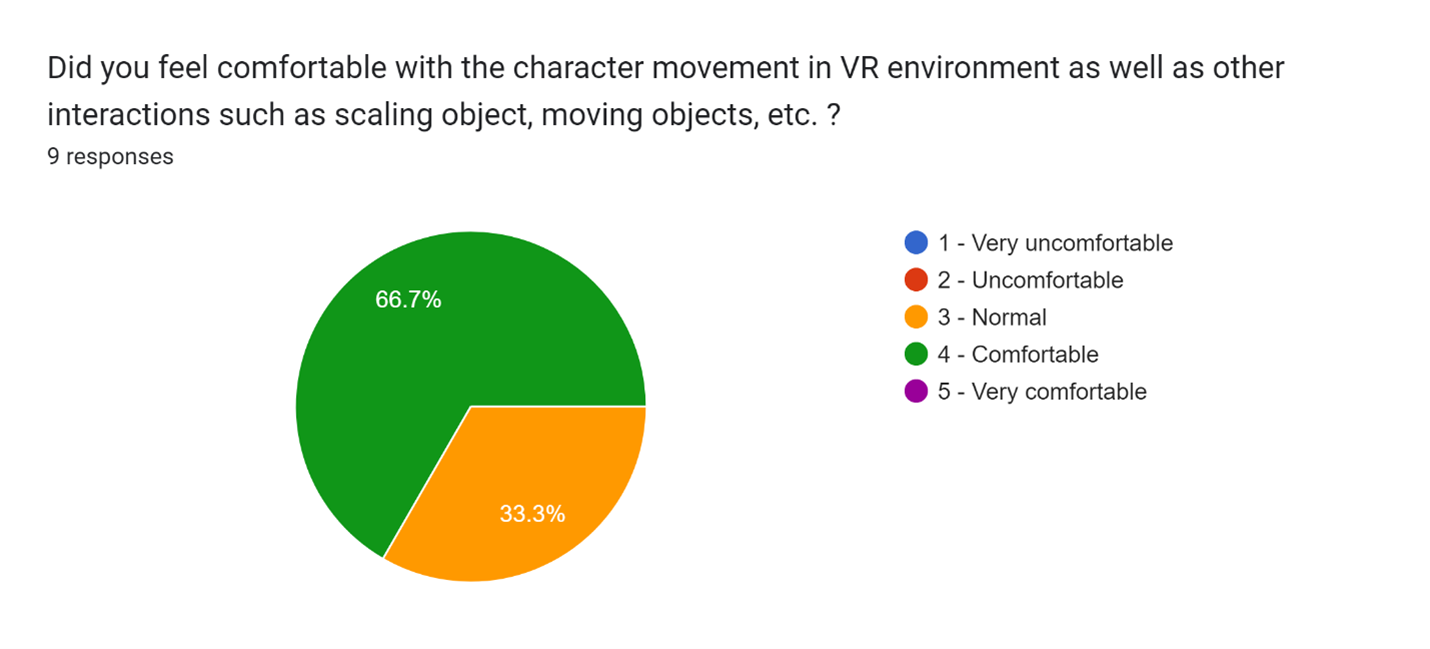

The question aimed to examine the VR character movement within the application's scenes as well as the functionality of the other interactions introduced. The results showed that none of the testers experienced any discomfort, and the application appeared to run without errors during their sessions.

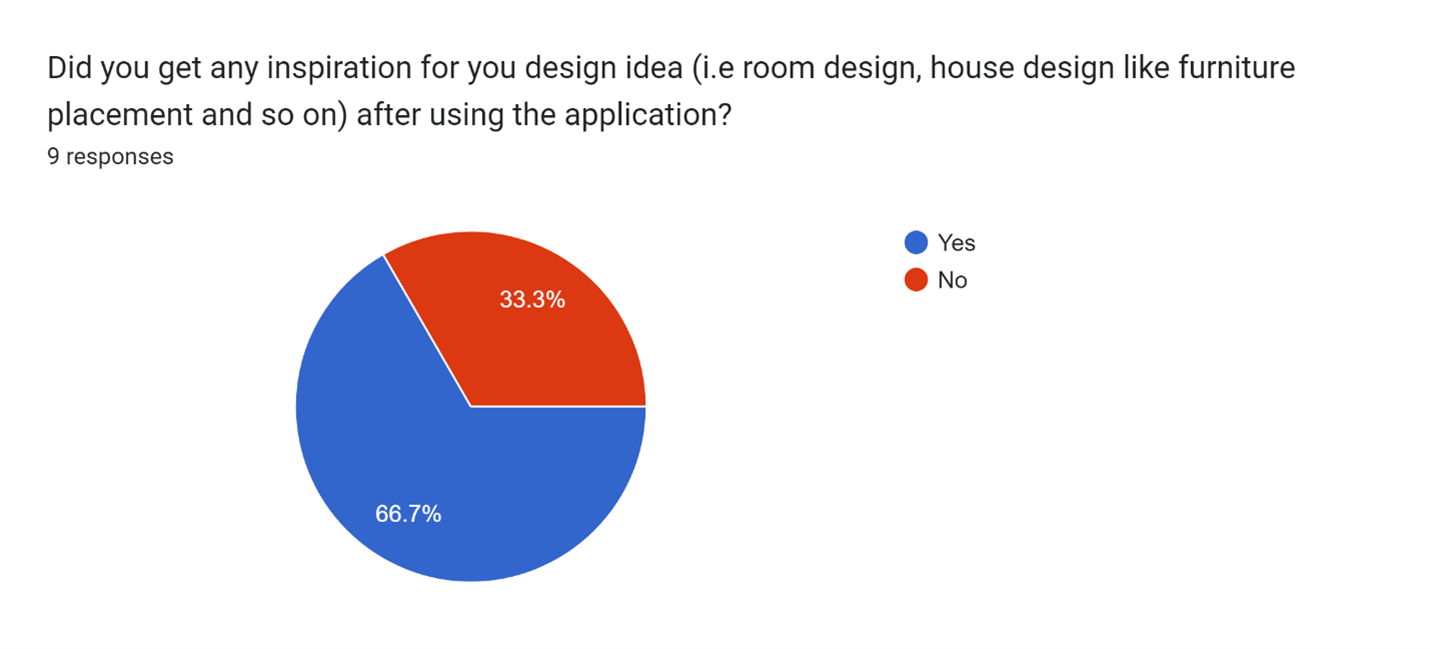

The goal of the application is to train interior designers, so it is important to understand whether users gained any value from using it.

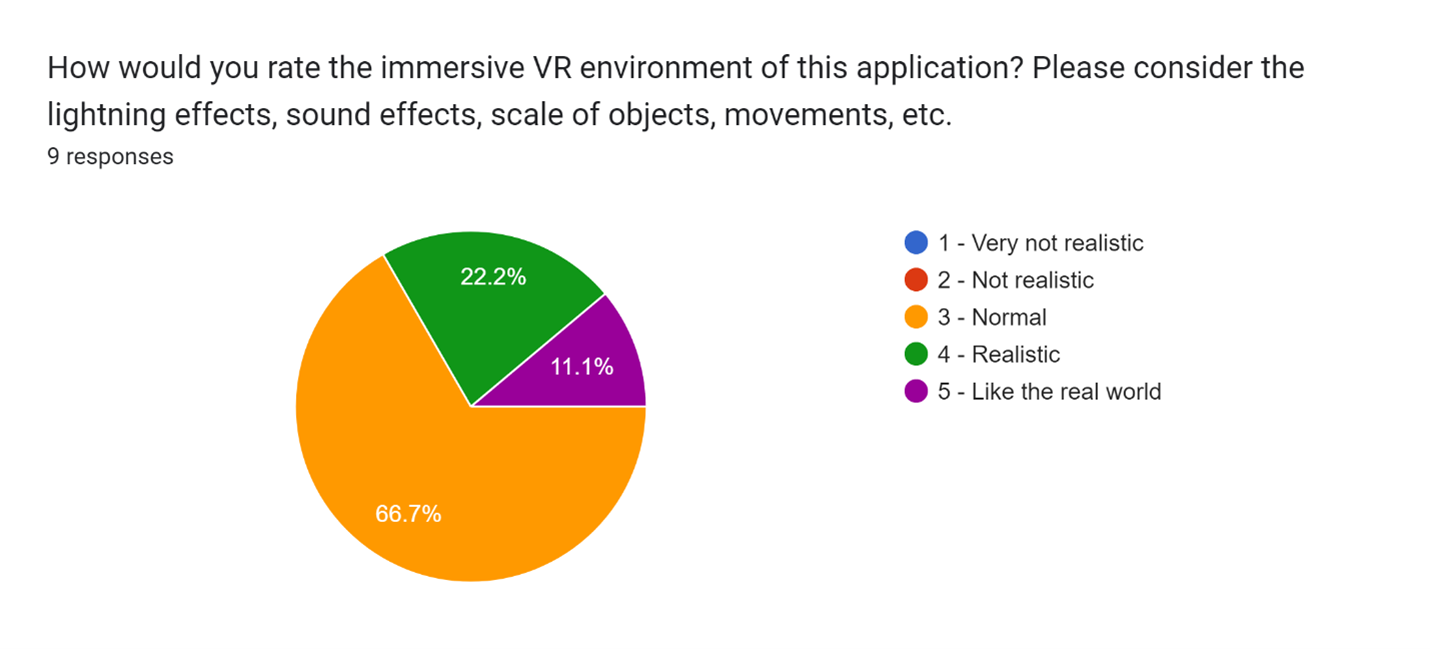

This question aimed to capture users' ratings of the application's features, as the lighting effects and the movement of in-game characters reflect how immersive the application is.

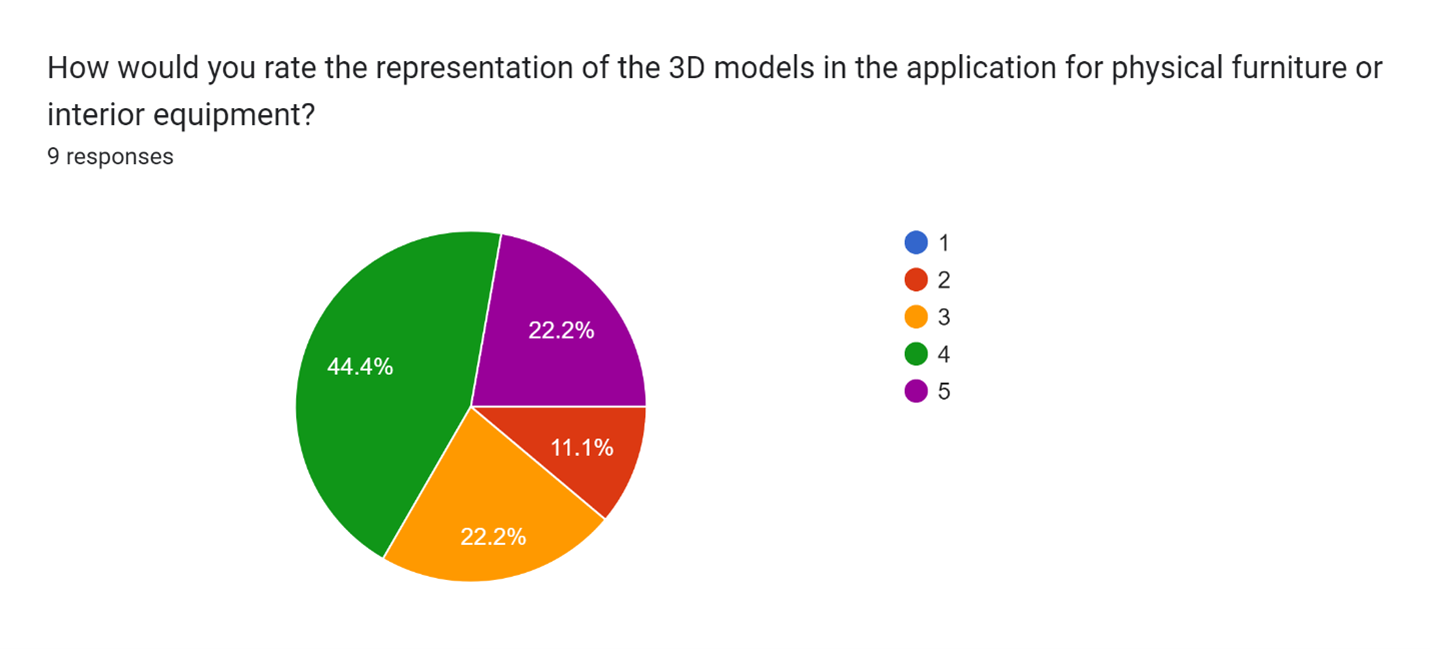

The question sought users' perspectives on the 3D models used within the application. Most responses rated the 3D models highly, suggesting that these models were effective.

This question's goal was to capture users' overall experience after using the application, in particular whether they would prefer this training method over traditional methods.

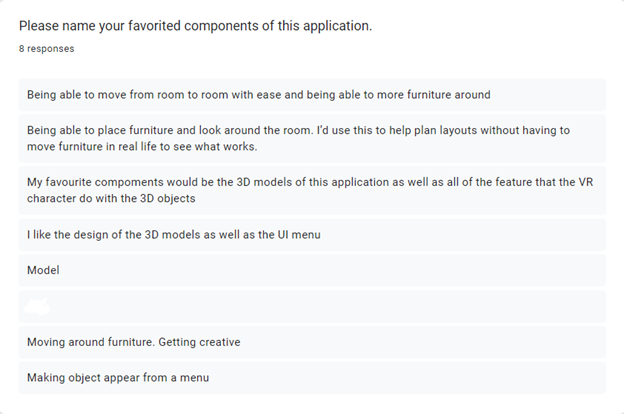

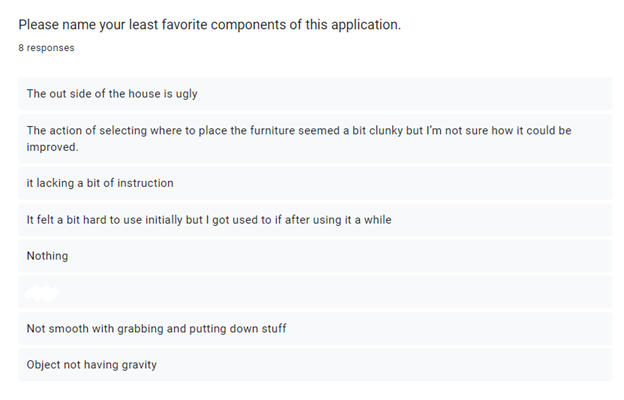

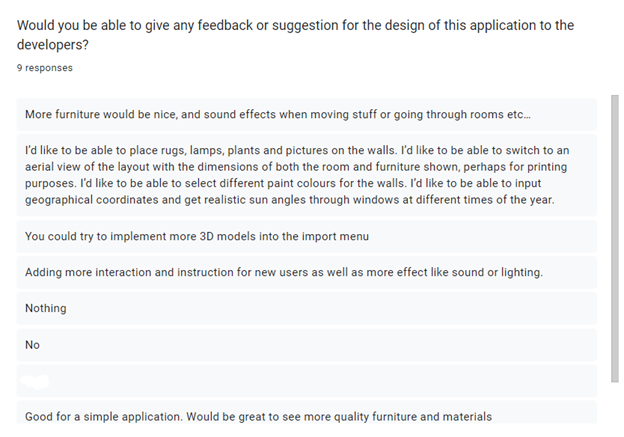

These open-ended questions asked for each user's favorite feature, least favorite feature and suggestions, which were valuable for the further development of this application.

Analysis of the findings

Overall, the users' feedback supported the use of a VR application for interior design training: many users preferred VR as an interface, or agreed with its use, compared to other methods. Most of them did not experience any difficulties using the VR headset or controlling the character's interactions and movement within the application. However, this may be influenced by the fact that most users had used VR before.

The immersion provided by the application's interactions and models received positive feedback, suggesting that the interactions and 3D assets were implemented and used effectively. Some users found the application difficult to use because of the lack of in-game instructions, which could be a larger problem for people with no VR experience.

Many suggestions were made about adding more interactions, functionality, realistic features and 3D models to the application, including sound effects and lighting effects. In particular, many participants pointed out that more 3D models could be made available from the user's menu.

Addressing Results of the Usability Testing:

We propose adopting an Agile methodology for further development of this project, dividing development into smaller cycles with iterative testing (Coursera 2023). This provides flexibility and adaptability if challenges arise.

Further development will focus on improving the interaction design, especially object manipulation, as suggested by the usability testing. Because this is an essential component of interior design in general, improving it is the priority, by making the interaction more natural and introducing gravity for objects.

Offering more furniture 3D models, realistic features and utility functions, such as changing wall materials, will also be part of the development phase, with further usability tests conducted to gather users' feedback. A wider range of furniture models will give users more options to import, enhancing the visual experience and their creativity.

In this improved prototype, artificial lighting was added by letting the user toggle the lamp model's light on and off. The environment's lighting can also be changed by switching the skybox material. These features make the design application more immersive, as lighting is a significant factor in interior design.

Furthermore, to make the application feel more responsive, sound effects were added for the user's interactions, together with background music, to increase user engagement. A timer was implemented in the user's menu to track the time spent, which also leaves room for introducing different design levels.

References:

Non-academic:

Theoretical knowledge vs practical application (no date). Available at: https://vesim.ves.ac.in/vesimblog/student-blog/185-theoretical-knowledge-vs-practical-application.html

Academic:

Coursera (2023) What is agile? and when to use it, Coursera. Available at: https://www.coursera.org/articles/what-is-agile-a-beginners-guide (Accessed: 27 October 2023).

NSW Government (no date) Usability testing, Digital.NSW. Available at: https://www.digital.nsw.gov.au/delivery/digital-service-toolkit/resources/user-r... (Accessed: 27 October 2023).

The Interaction Design Foundation (2023) What is usability testing?, The Interaction Design Foundation. Available at: https://www.interaction-design.org/literature/topics/usability-testing (Accessed: 27 October 2023).

Usability.gov (2013) Usability testing, Usability.gov. Available at: https://www.usability.gov/how-to-and-tools/methods/usability-testing.html (Accessed: 27 October 2023).

Racz, A. and Zilizi, G. (2018) 'VR aided architecture and interior design', 2018 International Conference on Advances in Computing and Communication Engineering (ICACCE), Paris, France, pp. 11-16. doi: 10.1109/ICACCE.2018.8441714.

Cao, K. and Li, L. (2019) 'Research on the application of VR technology in interior design', 2nd International Conference on Contemporary Education, Social Sciences and Ecological Studies (CESSES 2019), October 2019. Atlantis Press, pp. 745-748.

Nussbaumer, L.L. and Guerin, D.A. (2000) 'The relationship between learning styles and visualization skills among interior design students', Journal of Interior Design, 26, pp. 1-15. Available at: https://doi.org/10.1111/j.1939-1668.2000.tb00355.x

Kaleja, P. and Kozlovská, M. (2017) 'Virtual reality as innovative approach to the interior designing', Selected Scientific Papers - Journal of Civil Engineering, 12(1), pp. 109-116. Available at: https://doi.org/10.1515/sspjce-2017-0011

Assets:

https://assetstore.unity.com/packages/3d/props/furniture/furniture-set-free-242389

https://assetstore.unity.com/packages/3d/environments/urban/modular-house-pack-1-236466

https://assetstore.unity.com/packages/3d/props/furniture/furniture-free-pack-192628

https://assetstore.unity.com/packages/3d/props/clothing/bed-pbr-227070

https://assetstore.unity.com/packages/tools/input-management/simple-vr-teleporter-115996

Polygon/Low Poly Materials - Lite | 2D Textures & Materials | Unity Asset Store
